Introduction

In comic sequences produced with an AI art generator, character features often drift noticeably by panel three, turning promising strips into disjointed messes that demand full regeneration. The pattern persists even with detailed facial descriptions, which exposes the gap between single-image quality and sequential reliability. Ideogram v3's dedicated consistency features target that gap through reference uploads and specialized modes.

The challenge intensifies for brand mascots and comics, where visual continuity across generated character assets defines professionalism. Ideogram v3's reference image uploads and specialized modes prioritize feature retention over stylistic variation. Multi-model platforms such as Cliprise provide access to Ideogram v3 alongside alternatives, so creators can test workflows without switching interfaces. On Cliprise, users can launch Ideogram v3 directly from model pages, which streamlines experimentation for brand kits or comic strips.

This tutorial delivers a step-by-step workflow drawn from patterns in shared creator outputs, focusing on approaches for sequential panels and mascot variations. It covers why some creators prefer reference-based chaining over prompt-only methods for sequences of 4+ panels, how to lock baseline traits early, and which pitfalls tend to increase iteration counts in community-shared results. Without these insights, the same problems recur in comic arcs and mascot evolutions, where minor drifts compound into less usable assets and waste time that could go toward portfolios or campaigns.

Right now, with indie brands leaning on AI for cost-effective mascots and webcomics surging on platforms like Webtoon, command of Ideogram v3's consistency tools is one of the things that separates experienced creators from beginners. Freelancers report prototyping full character bibles more efficiently when they sequence correctly, while agencies scale campaign kits with fewer style breaks. Platforms like Cliprise facilitate this by organizing models into categories such as ImageEdit, where Ideogram v3 and its Character variant are listed for such tasks. The stakes involve not just output quality but workflow efficiency: poor sequencing leads to context switching between tools, which erodes focus mid-project.

Consider the broader context: AI image generation has evolved from isolated shots to narrative sequences, yet most guides overlook Ideogram v3's nuances, such as how reference uploads interact with CFG scales for pose retention. This article dissects those nuances using patterns from observed creator outputs; in reported cases, reference-heavy starts help maintain fidelity across angles and expressions. For those working through Cliprise, the unified interface makes it possible to draft a baseline with Veo-inspired prompts before remixing in Ideogram v3, blending video planning with image execution.

Thesis: by following this workflow (baseline definition, reference locking, panel chaining, refinement, and iteration), creators can achieve cross-panel harmony suitable for brand guidelines or publishable comics. We'll contrast it against common errors, compare it with Flux 2 and Midjourney in a comparison table, and highlight when to pivot. Platforms offering Ideogram v3, including Cliprise with its 47+ model access, enable rapid validation of these steps without credit silos. Understanding this now positions creators ahead of hybrid tools that merge image consistency with video extensions, where early mastery pays dividends.

Prerequisites: Setting Up for Success

Accessing Ideogram v3 requires an account on a platform that supports its API integration, such as a multi-model aggregator like Cliprise, where it is listed under ImageEdit alongside Ideogram Character. Sign-up involves email verification, a step that blocks generation until complete in many setups, so skipping it halts the workflow before it starts. Free tiers exist, but paid access better supports sequential work; platforms like Cliprise handle this via subscription models rather than upfront credit purchases, and pricing specifics are not covered here.

Basic familiarity with prompt engineering is essential: understanding subject descriptors, style qualifiers, and negative prompts prevents early failures. Creators new to this spend extra cycles on vague inputs like "cartoon fox," which yield inconsistent fur textures. The tools needed are the generation interface (Ideogram v3 via web or app), an image viewer for inspections (a browser suffices), and a basic editor for minor crops if references need prep. Nothing proprietary is required. When working in environments like Cliprise's app.cliprise.app, model selection from the /models index simplifies this, with specs on features like character mode visible before launch.

Time estimate for setup: around 10 minutes, covering account creation, model navigation, and a test generation. Test with a simple prompt: "front view of a red fox mascot, smiling, detailed eyes, cartoon style." Observe initial consistency before diving deeper. Multi-model users on platforms like Cliprise can cross-reference with Flux 2 for baselines and note how character retention differs between Ideogram v3 and Flux 2. Download the test output immediately: some interfaces default to public visibility on free plans, which matters for professional use.

Expert perspective: beginners overlook platform-specific toggles, like enabling "character consistency mode" in Ideogram v3 interfaces; intermediates verify seed reproducibility; pros batch references upfront. This setup phase, though brief, compounds: poor foundations lead to more iterations later. In Cliprise-like solutions, Firebase-integrated analytics track usage, but the focus here remains on output inspection. Prepare a prompt template document for reuse, listing traits like "asymmetric ear notch, green irises." With these pieces in place, the workflows that follow tend to hold character fidelity better.

What Most Creators Get Wrong About Ideogram v3 Character Consistency

Many creators assume descriptive prompts alone suffice for multi-panel consistency and generate isolated panels without a unified character sheet. Drift then accumulates over 3+ panels as facial proportions shift subtly, with jaws widening or snouts elongating. This fails because Ideogram v3 interprets the prompt afresh for each generation and keeps no memory across sessions; freelancer-shared comic strips show panel 1's hero morphing noticeably by panel 4. Without anchors, the model reinterprets traits variably, and the noise amplifies across a sequence. Platforms like Cliprise expose this when chaining generations, as credit consumption rises with retries.

Another error is neglecting seed values or reference uploads, which leads to unnatural pose variations: a mascot's confident stance in panel 1 slouches in panel 2 despite identical descriptors. Community forums offer plenty of examples, such as a 6-panel adventure where arm lengths fluctuate and force manual edits. Seeds lock randomness for reproducibility, while uploads enforce feature mapping; skipping them is fine for single images but crumbles in comics. In multi-model tools like Cliprise, where Ideogram v3 sits alongside seed-supporting models like Flux 2, this gap highlights workflow mismatches.

Over-prompting plagues others: packing 50+ words of attire, background, lighting, and action into one prompt invites style conflicts, like clashing fur shading. User-shared failures document this in brand mascot kits where emotion variants clash tonally. Ideogram v3 thrives on focused inputs; excess dilutes character signals. Contrarian view: less detail often retains more fidelity than verbose engineering.
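A quick length check can flag over-packed prompts before they are submitted. The sketch below is only an illustration: `word_count` and `is_overloaded` are hypothetical helper names, and the 50-word limit simply mirrors the "50+ words" figure mentioned above rather than any documented Ideogram threshold.

```python
def word_count(prompt: str) -> int:
    """Count whitespace-separated words in a prompt."""
    return len(prompt.split())


def is_overloaded(prompt: str, limit: int = 50) -> bool:
    """Return True when the prompt reaches the word limit (assumed: 50)."""
    return word_count(prompt) >= limit


focused = "anthropomorphic fox barista, apron, holding mug, detailed paw pads"
print(word_count(focused), is_overloaded(focused))  # prints: 9 False
```

A word count is a blunt proxy, so treat a flagged prompt as a cue to trim background and lighting detail rather than as a hard rule.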

Treating Ideogram v3 like Midjourney ignores its character focus mode, which weights facial landmarks over artistic flair. Midjourney's remix excels stylistically but, according to shared examples, drifts on identity across sequences. In real scenarios, freelancers prototyping comics solo run into trouble when they rely on prompts alone, while agencies that build kits methodically succeed with refs. On Cliprise, creators might default to Midjourney's style refs and miss Ideogram's upload precision. Experts know that mode activation plus refs helps prioritize identity over novelty.

These misconceptions inflate costs in time, credits, and frustration. Beginners chase prompts; intermediates test seeds; pros systematize refs. Aggregating platforms like Cliprise reveal the pattern: Ideogram v3 shines in character tasks when workflows align.

Core Workflow: Step-by-Step Ideogram v3 Character Consistency Tutorial

Step 1: Define Your Character Baseline

Start by crafting an initial prompt emphasizing 5-7 key traits: pose (standing frontally), expression (neutral smile), attire (scarf and vest), plus specifics like "triangular ears with white tips, heterochromia blue-green eyes, bushy tail with black tip." Generate 3-5 variants in Ideogram v3's standard mode. What emerges: consistent facial structure across outputs, as the model locks core anatomy early. In platforms like Cliprise, launch from the ImageEdit category for seamless access.

Common mistake: vague terms like "cute fox character," which produce results that vary wildly in scale and proportion. Use specifics to signal identity over style. Troubleshooting: if variations persist, set the CFG scale to 7-9 to balance adherence against rigidity. Beginners see scatter; intermediates note improved trait retention here. Time: 3-5 minutes. This baseline anchors everything, and skipping it can triple the fixes needed later. Example: for a coffee shop fox mascot, prompt "anthropomorphic fox barista, apron, holding mug, detailed paw pads." The outputs form a reliable sheet.
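One way to keep the baseline reusable is to store the traits as data and assemble the prompt from them. This is a minimal sketch, not an Ideogram API: the `CharacterBaseline` class and its field names are hypothetical, and the 5-7 trait check only encodes the guideline from this step.

```python
from dataclasses import dataclass, field


@dataclass
class CharacterBaseline:
    """Hypothetical container for the traits that define one character."""
    subject: str
    style: str
    traits: list = field(default_factory=list)

    def prompt(self) -> str:
        # This step suggests 5-7 key traits; reject anything outside that range.
        if not 5 <= len(self.traits) <= 7:
            raise ValueError(f"expected 5-7 traits, got {len(self.traits)}")
        return f"{self.subject}, {', '.join(self.traits)}, {self.style}"


fox = CharacterBaseline(
    subject="fox mascot",
    style="cartoon style",
    traits=[
        "standing frontally",
        "neutral smile",
        "scarf and vest",
        "triangular ears with white tips",
        "heterochromia blue-green eyes",
        "bushy tail with black tip",
    ],
)
print(fox.prompt())
```

Keeping the traits in one place means later steps can reuse exactly the same wording instead of retyping it.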

Step 2: Generate and Lock Reference Images

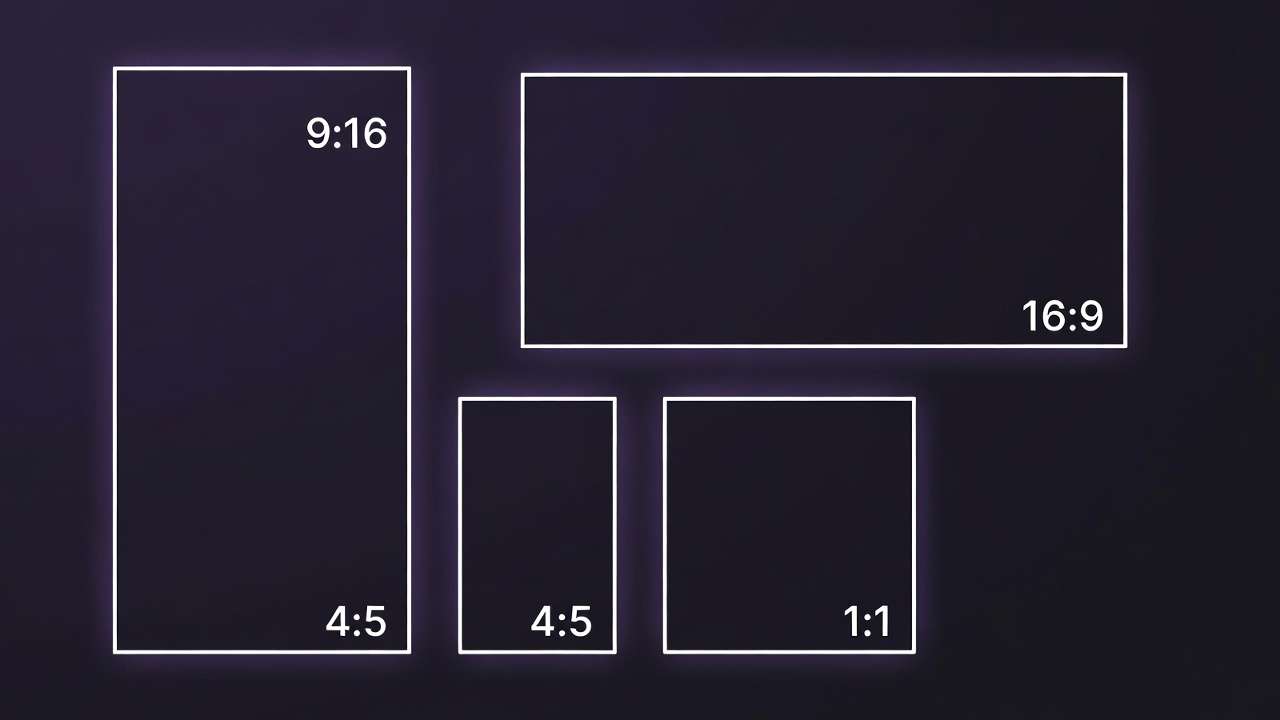

Produce 3-5 views: front, 3/4 side, profile, and close-up headshot. Activate character consistency mode if the interface offers it. Upload a selected baseline as the reference for remixes, specifying "same character, [new angle]." Platforms like Cliprise support this via model-specific pages, where specs detail upload capabilities. Time: 5 minutes per set. Expect higher fidelity in eyes and snout shape across angles, because refs map features directly.
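The "same character, [new angle]" pattern can be expanded into one remix prompt per view. The helper below is a hypothetical sketch that only builds strings; uploading the reference still happens in whichever interface is being used.

```python
# Views named in this step; extend the list if a fifth view is needed.
VIEWS = ["front", "3/4 side", "profile", "close-up headshot"]


def view_prompts(views=VIEWS):
    """Build one remix prompt per view, keeping the identity phrase fixed."""
    return [f"same character, {view}" for view in views]


for prompt in view_prompts():
    print(prompt)
```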

Why it works: Ideogram v3 analyzes uploads for keypoints, reducing reinterpretation. Pitfall: low-res refs blur mapping; upscale first. For experts, batch with seeds for variants. Example: profile retains ear notch precisely, unlike prompt-only drifts. In multi-model environments like Cliprise, cross-gen with Imagen 4 for validation.

Step 3: Build Sequential Panels for Comics

Number the prompts: "Panel 1: [fox waves hello], same character as reference 1, cartoon style." Use each panel as input for the next: upload the panel 1 output for panel 2 continuity. Chain 4-6 panels with a fixed structure: action + reference + negatives like "deformed face, extra limbs." Pitfall: varying the wording between panels breaks the chain, so copy-paste the core phrasing. Example: in a 4-panel strip (panel 1 greet, 2 jump, 3 serve coffee, 4 bow), the pose changes but the identity holds.
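The chaining structure can be sketched as a small planner that keeps the core phrasing constant and points each panel at the previous panel's output. Everything here is an assumption for illustration: the dictionary keys, the `build_panel_chain` helper, and the `panel_N.png` filenames are hypothetical and do not correspond to a documented Ideogram or Cliprise request format.

```python
NEGATIVES = "deformed face, extra limbs"
CORE = "same character as reference, cartoon style"  # copy-pasted core phrasing


def build_panel_chain(actions, first_reference):
    """Plan one generation per panel; each panel references the previous output."""
    panels = []
    reference = first_reference
    for number, action in enumerate(actions, start=1):
        panels.append({
            "prompt": f"Panel {number}: {action}, {CORE}",
            "negative_prompt": NEGATIVES,
            "reference_image": reference,
        })
        # Hypothetical filename for this panel's saved output,
        # used as the reference for the next panel.
        reference = f"panel_{number}.png"
    return panels


strip = build_panel_chain(
    ["fox waves hello", "fox jumps", "fox serves coffee", "fox bows"],
    first_reference="baseline_front.png",
)
for panel in strip:
    print(panel["reference_image"], "->", panel["prompt"])
```

Only the action changes from panel to panel, which is the point of the copy-paste rule above.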

In Cliprise workflows, queue multiple for efficiency. Beginners chain 2-3; pros hit 10 with minimal drift. Observed: ref chaining improves pose retention. Time: 10-15 min for strip.

Step 4: Refine for Brand Mascot Kits

Create packs: 8 emotions (happy, sad, and so on) and 6 poses (walk, sit, and so on), using negatives such as "inconsistent features, style shift." Specify "consistent volumetric lighting across all." Integrate the results into guidelines by labeling sheets, for example "Fox Mascot v1.0." Troubleshooting: if lighting mismatches appear, lock it with "diffuse studio light." Example: in a kit for an indie game, the emotes stay aligned with one another. Tools like Cliprise enable export to Pro Image Editor for tweaks.
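A kit like this is easier to track as a manifest of labeled prompts. The sketch below assumes a hypothetical `build_kit` helper and entry layout; only the two emotions and two poses named above are filled in, and the remaining ones are left for the creator to supply.

```python
def build_kit(label, emotions, poses,
              lighting="consistent volumetric lighting across all"):
    """Return one labeled prompt entry per emotion and per pose."""
    negatives = "inconsistent features, style shift"
    kit = []
    for group, values in (("emotion", emotions), ("pose", poses)):
        for value in values:
            kit.append({
                "sheet": f"{label} / {group} / {value}",
                "prompt": f"same character as reference, {value}, {lighting}",
                "negative_prompt": negatives,
            })
    return kit


kit = build_kit("Fox Mascot v1.0", ["happy", "sad"], ["walk", "sit"])
for entry in kit:
    print(entry["sheet"], "->", entry["prompt"])
```

Passing `lighting="diffuse studio light"` applies the troubleshooting fix to every entry at once.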

Step 5: Iterate and Export

Upscale finals (Recraft or Topaz via some platforms), compile into sheets/comics. Check harmony: print or zoom for micro-drifts. Iterate: regen outliers with tightened refs. Final: PDF brand bible or Webtoon-ready strip. In Cliprise, download preserves quality.

Real-World Comparisons and Use Cases

Freelancers use reference-heavy flows for quick mascot prototypes, generating 10 variants in 30 minutes, whereas agencies craft full bibles over hours with client feedback loops. Solo creators prioritize comic speed, while teams focus on campaign scalability. Reference uploads suit sequences of 10+ panels and help minimize drift when the action changes; prompt-only generation suits single images.

Comparison Table: Ideogram v3 vs. Other Tools for Character Consistency

| Scenario | Ideogram v3 (Ref Uploads) | Flux 2 (Seed-Based) | Midjourney (Style Refs) | Time to 4-Panel Comic |
|---|---|---|---|---|
| Brand Mascot (Single Pose Variants) | Facial keypoint retention supported via reference uploads; suitable for sets of emotion variations | Proportions held via fixed seeds across batches; prompt tweaks needed for angle changes | Style maintained in remix mode; character weight adjustments available for feature focus | Varies with reference use |
| Comic Sequence (Action Changes) | Pose retention via chained reference uploads; supports motion shifts in panels | Base locked by seeds but potential drift in dynamic elements; suitable for static sequences | Character reference (--cref) supports identity retention; aids narrative panel flow | Varies with chaining method |
| Multi-Character Scene | Individual fidelity via multiple references; group cohesion depends on prompt structure | Group handling with seeds; performs for solo or simple groups | Character weights support multi-figure consistency; handles group scenes | Varies by scene complexity |
| Low-Prompt Complexity | Direct trait mapping for simple descriptors; quick generations for basic characters | Requires detailed prompts for basic outputs; seed aids consistency | Artistic interpretation helps quick sketches; style refs enhance basics | Varies by prompt detail |
| High-Volume Kits (50+ Assets) | Reference packs support scaling; compatible with queue workflows | Seeds enable batching; suitable for volume with monitoring | Remix supports scaling; style management needed for large sets | Varies by batch setup |
| Learning Curve for Sequences | Upload-based approach for first strips; mode aids intuitiveness | Seed parameter mastery; prompt engineering emphasized | --cref learning; interface-specific workflows | Varies by user experience |

As the table illustrates, Ideogram v3 supports ref-driven retention for comics, while Flux 2 suits seeded simplicity. One surprising insight: Midjourney's remix supports multi-character scenes but varies on pure identity focus.

Use case 1: Coffee shop mascot. A freelancer starts with a baseline fox barista (Step 1), creates refs for poses (Step 2), and builds a 4-panel promo (Step 3). Output: a kit used in Instagram stories, consistent across posts. In Cliprise, a Flux baseline transitions smoothly.

Use case 2: Webcomic hero arc. A solo creator chains 6 panels of an elf warrior, refining lighting (Step 4). Result: a strip publishable on Tapas, with fewer redraws.

Use case 3: Product promo series. An agency builds robot mascot variants for ads, iterating on exports (Step 5), and scales to 50 assets. Platforms like Cliprise aggregate models for A/B tests with Kling video extensions.

Community patterns: Forums show ref users report fewer retries. Cliprise users leverage model index for hybrids.

When Ideogram v3 Character Consistency Doesn't Help

Hyper-realistic humans challenge Ideogram v3: face drift is noticeable in sequences because of fine details like skin pores and wrinkles. Refs capture broad strokes but falter on micro-expressions. Example: in a portrait comic of an executive, panel 3's jawline shifts and breaks immersion. Why? The model's training appears to favor stylized over photoreal output; consider switching to Imagen 4 variants.

Complex multi-figure scenes with interactions overwhelm the model: a ref for one character dilutes the others, and fidelity varies in crowds. Scenario: in a team mascot comic, group poses vary unnaturally. The approach works better for solos and duos.

Video-focused creators find the static limits binding: there is no native extension to motion, unlike Sora 2. Edge case: AR filters, where drift across rotations makes the output unusable.

Avoid if production pipelines demand high repeatability without refs (e.g., game assets); seeds alone may be insufficient. Limitations: queue waits on high-demand platforms and ref size caps (under 2MB is typical). Unsolved: syncing dynamic lighting across panels without manual post-processing.
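Because oversized references are a common stumbling block, a size check before upload can save a failed attempt. This sketch assumes the "under 2MB" figure above means 2 MiB and that the cap is strict; the actual limit depends on the platform, and `within_cap` and `reference_ok` are hypothetical helper names.

```python
from pathlib import Path

# Assumption: "under 2MB" interpreted as strictly below 2 MiB.
ASSUMED_CAP_BYTES = 2 * 1024 * 1024


def within_cap(size_bytes, cap_bytes=ASSUMED_CAP_BYTES):
    """Return True when a file of this size is under the assumed cap."""
    return size_bytes < cap_bytes


def reference_ok(path, cap_bytes=ASSUMED_CAP_BYTES):
    """Check an image file on disk against the assumed cap."""
    return within_cap(Path(path).stat().st_size, cap_bytes)


print(within_cap(1_500_000))  # prints: True
print(within_cap(3_000_000))  # prints: False
```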

In Cliprise, pivot to video models when static images are insufficient.

Why Order and Sequencing Matter in Character Workflows

Starting full comics without a baseline can triple the mental overhead: creators regenerate panels repeatedly and lose prompt context. Why? With no anchor, traits get redefined in every panel.

An image-first approach (refs upfront) reduces retries according to forum reports: batch poses before scenes. Prompt-first suits quick tests but scales poorly.

Use image→sequence for comics and prompt→image for ideation. Community reports suggest ref pipelines reach coherent 8-panel strips faster.

In Cliprise, order leverages model chaining.

Industry Patterns and Future Directions

Forum trends show rising AI mascot use among indies, with consistent characters driving adoption. Comics are shifting toward AI prototypes.

What is changing: multi-reference enhancements. Over the next 6-12 months, expect video hybrids, such as Veo-style consistency.

Prepare: master refs now. Cliprise positions for this.

Conclusion

The key steps (baseline, refs, chain, refine) unlock reliability. Next: test them on a personal project. Platforms like Cliprise enable efficient trials.